Compare Traditional vs. Cloud Load Balancing

Traditional Network-Based Load Balancing

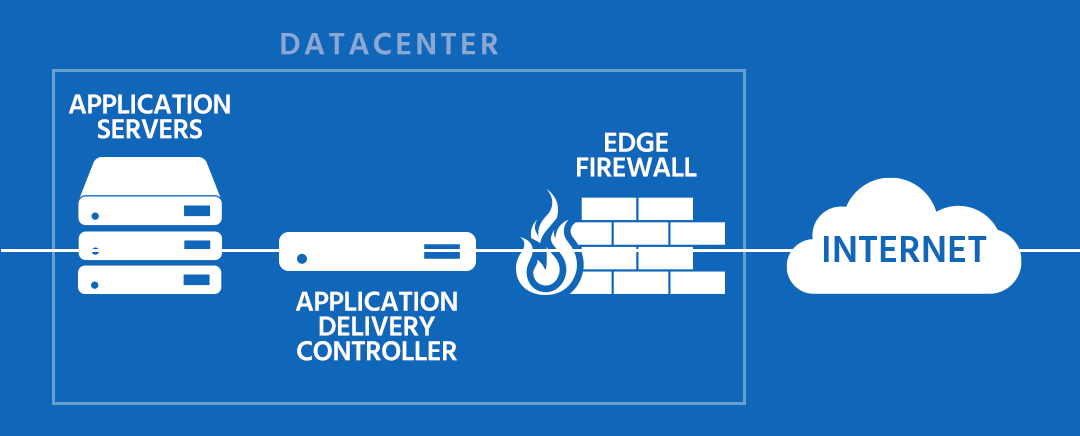

- The user (client) connects to the Internet and requests a service (e.g. a website).

- DNS resolves the requested name to a specific IP address at a specific data center.

- The user is connected to the load balancer, which answers on that IP address (the virtual server IP).

- The load balancer accepts the connection, and after deciding which server (or host) should receive the connection, changes the destination IP (and possibly port) to match the service of the selected host.

- The server accepts the connection and responds to the original source, the client, via its default route, which is the load balancer.

- The load balancer intercepts the return packet from the host and now changes the source IP (and possibly the port) to match the virtual server IP and port, and forwards the packet back to the client.

- The client receives the return packet, believing that it came from the virtual server, and displays the content.
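The two address rewrites in the steps above can be sketched in a few lines of Python. This is a minimal illustration and not any vendor's implementation: the `Packet` and `NatLoadBalancer` names, the example addresses, and the round-robin choice of host are all assumptions made for the sketch. The steps above only say that the load balancer "decides" which host receives the connection.

```python
from dataclasses import dataclass, replace
from itertools import cycle


@dataclass(frozen=True)
class Packet:
    src: tuple[str, int]  # (IP, port) of the sender
    dst: tuple[str, int]  # (IP, port) of the receiver


class NatLoadBalancer:
    """Hypothetical load balancer that rewrites packet addresses."""

    def __init__(self, vip, backends):
        self.vip = vip                        # virtual server IP and port
        self._next_backend = cycle(backends)  # assumed policy: round robin
        self._connections = {}                # client address -> chosen host

    def inbound(self, packet):
        """Client to virtual server: change the destination to a host."""
        backend = self._connections.get(packet.src)
        if backend is None:
            backend = next(self._next_backend)
            self._connections[packet.src] = backend
        return replace(packet, dst=backend)

    def outbound(self, packet):
        """Host to client: change the source back to the virtual server."""
        return replace(packet, src=self.vip)


lb = NatLoadBalancer(
    vip=("203.0.113.10", 443),
    backends=[("10.0.0.11", 8443), ("10.0.0.12", 8443)],
)
request = Packet(src=("198.51.100.7", 51000), dst=("203.0.113.10", 443))
to_server = lb.inbound(request)                        # destination is now a host
reply = Packet(src=to_server.dst, dst=to_server.src)   # host answers the client
to_client = lb.outbound(reply)                         # source is now the virtual server
```

Because the reply leaves the load balancer with the virtual server address as its source, the client never sees the host's real address, which matches the last step in the list.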

Global Cloud Load Balancing

Global Cloud Load Balancing uses the same basic principle on a significantly larger scale. The fundamental goal of spreading traffic across a range of servers remains, but Total Uptime Cloud Load Balancing applies it across data centers rather than only within one. Load balancing within the data center is still needed, but today there is an even greater need to extend its benefits to a global audience by routing users and traffic to the nearest data center that can deliver the application performance and availability they require.

Global load balancing works like this:

- The user (client) connects to the Internet and requests a service (e.g. a website).

- DNS resolves the requested name to a specific IP address on the Total Uptime IP anycast network.

- The user is connected to the closest Total Uptime node (data center).

- The Total Uptime node accepts the connection and, based on customer-specified policy, decides which of the customer’s data centers should receive the user. Optionally, it can route the client to the data center closest to them based on geographic proximity**.

- The user is directed to the customer’s data center containing the desired application content.

- Content is delivered to the user via the local Total Uptime cloud node. If a subscription to acceleration is active, content is fast-tracked across the Internet back to the user.

- Additional network-based load balancing may still be used to spread the load over a server farm as above.
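The policy decision made by the node in the steps above can be sketched as a function that picks one of the customer's data centers. This is only an illustration under stated assumptions: the function names, the `healthy` status map, and the two policies shown (priority failover and spreading across healthy data centers) are hypothetical and do not describe the actual Total Uptime configuration or API.

```python
import random


def choose_failover(datacenters, healthy):
    """Return the first healthy data center in priority order, or None."""
    for dc in datacenters:
        if healthy.get(dc, False):
            return dc
    return None


def choose_balanced(datacenters, healthy, rng=random):
    """Return a randomly chosen healthy data center, or None if all are down."""
    candidates = [dc for dc in datacenters if healthy.get(dc, False)]
    return rng.choice(candidates) if candidates else None


# Hypothetical health status as seen by the node.
status = {"dc-a": False, "dc-b": True}

primary = choose_failover(["dc-a", "dc-b"], status)  # dc-a is down, so dc-b
spread = choose_balanced(["dc-a", "dc-b"], status)   # only dc-b is eligible
```

In both policies an unhealthy data center simply drops out of the candidate set, so users are only ever directed to a location that can serve them.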

** As shown in the diagram above, it is possible to direct users from APAC to one data center (perhaps the one closest to users in that region), users from the USA to another, users from the EU to another, and so on. We offer a custom add-on for $49/month (for any plan) that upgrades our interface to allow regional control. By default, the interface allows you to manage a single, global configuration. For example, all users go to data center A until it goes down and are then routed to data center B; or all users are load balanced between data centers A and B, and if one goes down, it is taken out of rotation. These are only two examples of dozens of possibilities. Ask your account manager for further information about this GEO routing capability.
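Regional control of this kind can be pictured as a lookup from the user's region to a preferred data center, with a global order used when no regional rule applies. The sketch below is hypothetical: the region labels, the `route_by_region` function, and the choice to fall back to the global order when the regional data center is down are assumptions for illustration, not a description of the GEO routing add-on itself.

```python
def route_by_region(client_region, regional_targets, fallback_order, healthy):
    """Prefer the region's data center; otherwise use the global order."""
    preferred = regional_targets.get(client_region)
    if preferred is not None and healthy.get(preferred, False):
        return preferred
    # Assumed behavior: no regional rule, or the regional target is down.
    for dc in fallback_order:
        if healthy.get(dc, False):
            return dc
    return None


# Hypothetical regional rules and health status.
regional_targets = {"APAC": "dc-singapore", "USA": "dc-dallas", "EU": "dc-frankfurt"}
fallback_order = ["dc-dallas", "dc-frankfurt", "dc-singapore"]
status = {"dc-singapore": True, "dc-dallas": True, "dc-frankfurt": False}

apac_target = route_by_region("APAC", regional_targets, fallback_order, status)
eu_target = route_by_region("EU", regional_targets, fallback_order, status)
```

Here an APAC user is sent to the regional data center, while an EU user falls back to the first healthy entry in the global order because the EU data center is marked down.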